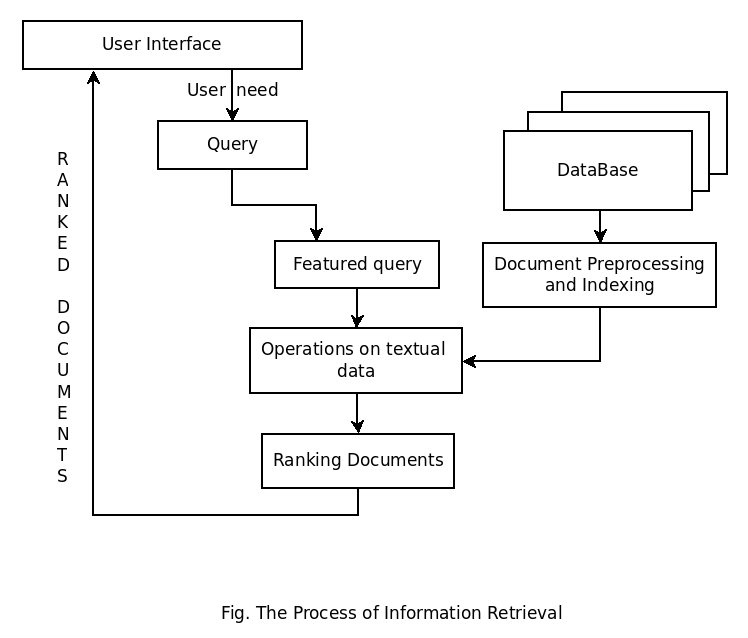

The Process of Information Retrieval

The Information Retrieval (IR) process works as follows:

- The process of Information Retrieval starts when a user enters a query into the system through a graphical interface.

- These user-defined queries are statements of an information need, for example, the queries users submit to search engines.

- In IR, a single query does not match exactly one data object. Instead, it matches several data objects in the collection, from which the most relevant documents are taken for further evaluation.

- The relevant documents are ranked to find the ones most closely related to the given query.

- This ranking is the key difference between database searching and Information Retrieval.

- The query is then sent to the core of the system, which has access to the content management module. That module is directly linked to the back end, i.e. the large collections of data objects.

- Once the core system has generated the results R, they are returned to the user through a graphical user interface.

- The process repeats, with the user modifying the results, until they get what they are actually looking for.
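As a rough illustration of the matching and ranking steps above, here is a minimal sketch in Python. It assumes a toy in-memory collection and scores each document by counting occurrences of the query terms; the names `score`, `retrieve`, and `collection` are hypothetical, and real IR engines use far more refined relevance measures.

```python
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it on whitespace."""
    return text.lower().split()


def score(query, document):
    """Count how often the distinct query terms occur in the document."""
    doc_counts = Counter(tokenize(document))
    return sum(doc_counts[term] for term in set(tokenize(query)))


def retrieve(query, collection, top_k=3):
    """Return up to top_k (doc_id, score) pairs with a non-zero score, best first."""
    scored = [(doc_id, score(query, text)) for doc_id, text in collection.items()]
    matches = [(doc_id, s) for doc_id, s in scored if s > 0]
    return sorted(matches, key=lambda pair: pair[1], reverse=True)[:top_k]


# Toy collection standing in for the back-end data objects (an assumption).
collection = {
    "d1": "information retrieval ranks documents by relevance",
    "d2": "a database query returns exact matches",
    "d3": "search engines use information retrieval to answer a query",
}

results = retrieve("information retrieval query", collection)
print(results)
```

Unlike a database lookup, every document that shares at least one term with the query is returned, and the ranking decides which of them the user sees first.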

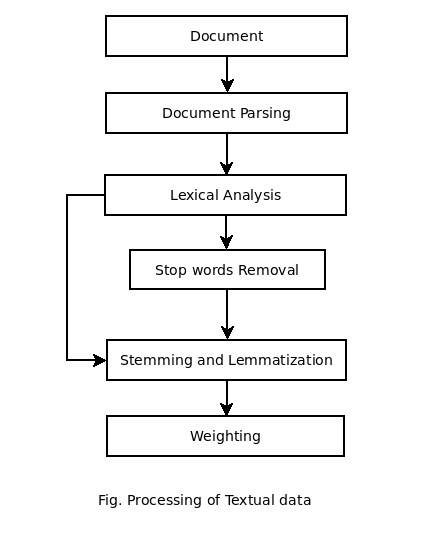

The operations on the textual data of documents are illustrated in the figure below.


The figure above sketches the processing of textual data typically performed by an Information Retrieval engine, which takes a document as input and yields its index terms.

1. Document Parsing: Documents come from different sources and in different combinations of languages, formats, and character sets. A single document may even contain more than one language, e.g. a Spanish email that has some part in French. Document parsing deals with the overall document structure: in this phase, the document is broken down into discrete components. The preprocessing phase then creates unit documents, for example, one document representing the email and another representing an additional specific part of it.

2. Lexical Analysis: In lexical analysis, tokenization is the process of breaking a stream of text into words, phrases, symbols, or other meaningful elements called tokens. These tokens are then passed on to part-of-speech tagging. Typically, tokenization occurs at the word level.

3. Stemming and Lemmatization: In English grammar, we often use different forms of a word to form correct sentences, e.g. go, going, goes. Stemming is the process of cutting off affixes so that the root word is left. For example, a regular plural is formed as noun + plural affix. For more, see A Cognitive study of Lexicons by Shirish Kadam. Lemmatization usually refers to doing this properly, using a vocabulary and morphological analysis of words, and aiming to remove inflectional endings only.
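To make the difference between tokenization, stemming, and lemmatization concrete, here is a small sketch in Python. It is only an illustration under stated assumptions: the suffix list `SUFFIXES` and the lemma dictionary `LEMMAS` are hypothetical toy data, and the suffix stripper is a naive stand-in, not an established stemming algorithm.

```python
import re

# Illustrative suffix list (an assumption), checked in this order.
SUFFIXES = ("ing", "es", "s")

# Hypothetical lemma dictionary standing in for real vocabulary
# and morphological analysis.
LEMMAS = {"goes": "go", "going": "go", "went": "go", "gone": "go"}


def tokenize(text):
    """Break a stream of text into lowercase word-level tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def naive_stem(token):
    """Strip the first matching suffix, keeping a stem of at least two characters."""
    for suffix in SUFFIXES:
        if token.endswith(suffix) and len(token) - len(suffix) >= 2:
            return token[: -len(suffix)]
    return token


def lemmatize(token):
    """Look the token up in the lemma dictionary; unknown tokens pass through."""
    return LEMMAS.get(token, token)


tokens = tokenize("He goes, she is going; they went.")
stems = [naive_stem(t) for t in tokens]
lemmas = [lemmatize(t) for t in tokens]

print(tokens)
print(stems)
print(lemmas)
```

In this sketch the suffix stripper reduces "goes" and "going" to "go" but leaves the irregular form "went" untouched, while the dictionary-based lemmatizer maps all three to "go". That is the practical sense in which lemmatization relies on vocabulary rather than on cutting affixes.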
